EUMASTER4HPC Hackathon, July 2023

At the heart of this hackathon: the modern HPC Cloud landscape and its challenges.

In today's HPC landscape, using Cloud HPC resources efficiently calls for a multifaceted approach. Users must identify which workloads suit the Cloud, build tailored Cloud HPC clusters, and manage these environments intelligently. The EUMaster4HPC Summer School 2023 hackathon illustrates these challenges, giving participants hands-on experience in workload selection, cluster customization, and orchestration. The event mirrors the wider HPC landscape and prepares participants to excel in the Cloud HPC era. As the technology advances, hackathons like this one become crucial in shaping the future of high-performance computing.

The EUMaster4HPC Hackathon

From July 24 to July 28, 2023, the first EUMaster4HPC Summer School Hackathon took place at Grenoble INP – Ensimag, in France. It brought together 25 talented students from across Europe. Their mission: port the GEOSX simulation framework (a collaboration between TotalEnergies, Lawrence Livermore National Laboratory, and Stanford University) to AWS EC2 Hpc7g using the Arm tools.

Supported by AWS and a broad network of partners, including NVIDIA, UCit, ARM, and SiPearl, the hackathon marked an important step in the convergence of high-performance computing (HPC) and the Cloud. Held under the aegis of EUMaster4HPC, a European consortium at the forefront of HPC education, the event showcased the coming together of academia and industry and demonstrated the immense potential of HPC in the cloud. With Amazon Web Services (AWS) as a key participant, it ushered in a new era of collaborative innovation.

The students immersed themselves in GEOSX and its test suites, then presented their results and received invaluable feedback. UCit's HPC Cloud solutions, running on AWS, provided dedicated HPC resources to each of the five teams, while GEOSX found a new home on AWS ARM Graviton3, a testament to the effectiveness of the port and the strength of the partnership. Despite persistent challenges, the students' enthusiasm and the support of AWS and its partners prevailed, making the hackathon a resounding success, thanks to the dedicated efforts of Kevin Tuil, Conrad Hillairet, Benjamin Depardon, Jorik Remy, and François Hamon.

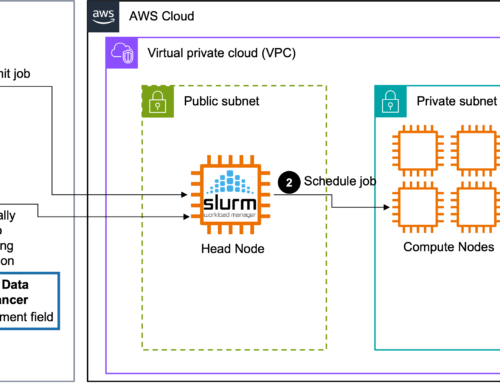

GEOSX, an innovative carbon storage simulation tool that had never been ported to an ARM architecture

GEOSX is a cutting-edge open-source software package designed to address the critical challenge of carbon dioxide (CO2) storage, a key aspect of the fight against climate change. It is a powerful tool for modeling and simulating the subsurface processes involved in CO2 storage. It helps produce detailed well designs for CO2 injection, taking into account factors such as well composition, rock characteristics, and potential fluid leakage pathways. GEOSX also helps predict how fluids flow and how rocks fracture deep underground, providing 3D predictions of the behavior of subsurface reservoirs, which are essential for assessing their suitability for long-term CO2 storage. By dividing simulations into manageable sections, GEOSX can tackle large-scale subsurface modeling, with predictions spanning from a few seconds to thousands of years into the future. Its open-source nature and portability across platforms make it accessible to a wide range of users, from standard laptops to supercomputers and exascale platforms. GEOSX is a critical step toward developing effective CO2 sequestration solutions and advancing the transition to a low-carbon future.

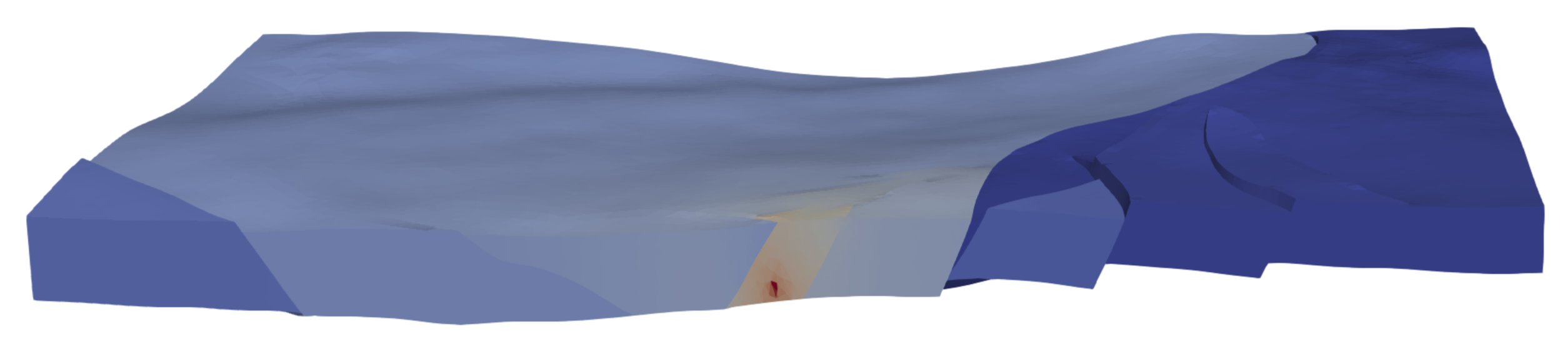

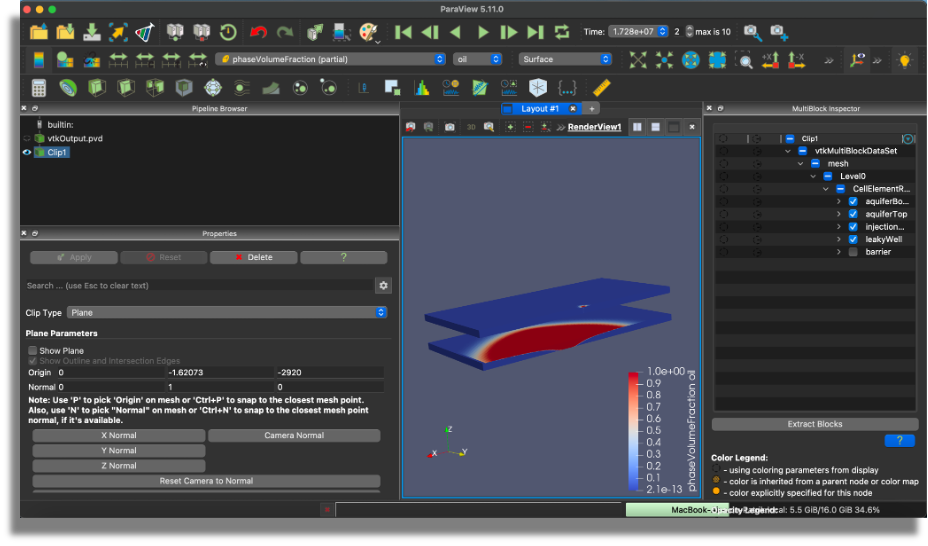

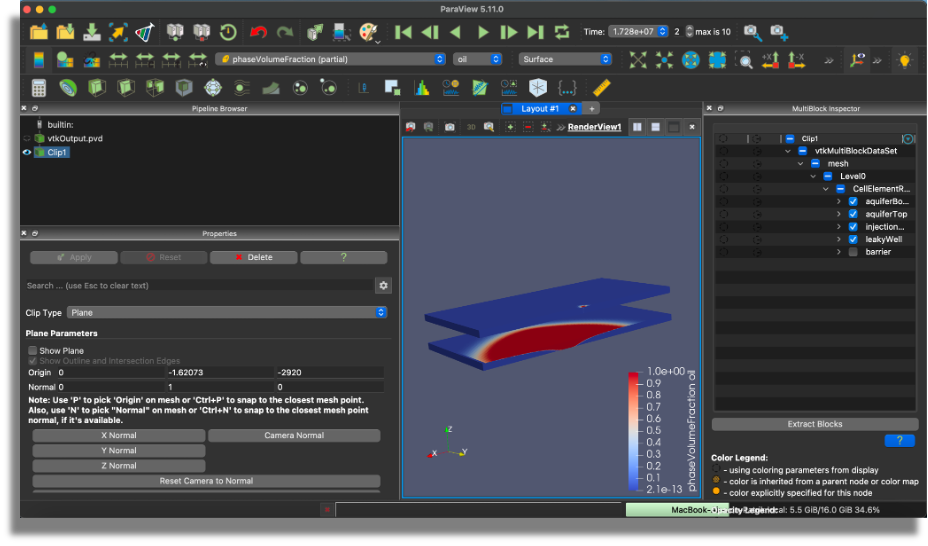

GEOSX simulation of the fluid pressure distribution in a faulted reservoir caused by CO2 injection. GEOSX provides a framework for modeling complex flow and geomechanics processes on next-generation computing architectures. Credit: geological data courtesy of the Gulf Coast Carbon Center.

The mission

The students worked in teams of 5. The hackathon was not a competition between teams: its goal was to let students discover what it means to be an HPC engineer and to face the same kinds of problems and challenges they will encounter when working with a new code on a new HPC platform.

The objective was therefore threefold:

- Discover the AWS Cloud environment,

- Test and evaluate the performance of an ARM-based HPC cluster on AWS, with the latest-generation AWS Graviton 3 processors,

- Compile and run simulations with the GEOSX application.

The hackathon ran over one week, organized as follows:

- 1 day of introduction to the ARM ecosystem and the AWS platform

- 3 days of hands-on work

- A final day for presenting results

Even with little time to reach every objective, all the teams performed very well and did great work. Congratulations to all.

A fully equipped ARM HPC cluster on AWS

Providing an HPC platform to the students was easy: we relied on our CIAB (Cluster-in-a-Box) offering to set up a complete HPC environment in a dedicated AWS account. CIAB is built on our CCME (Cloud Cluster Made Easy) solution, which automates the deployment of HPC clusters on AWS.

For the Hackathon, here is a general overview of what was deployed:

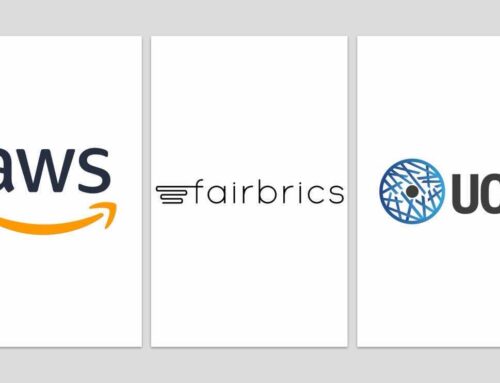

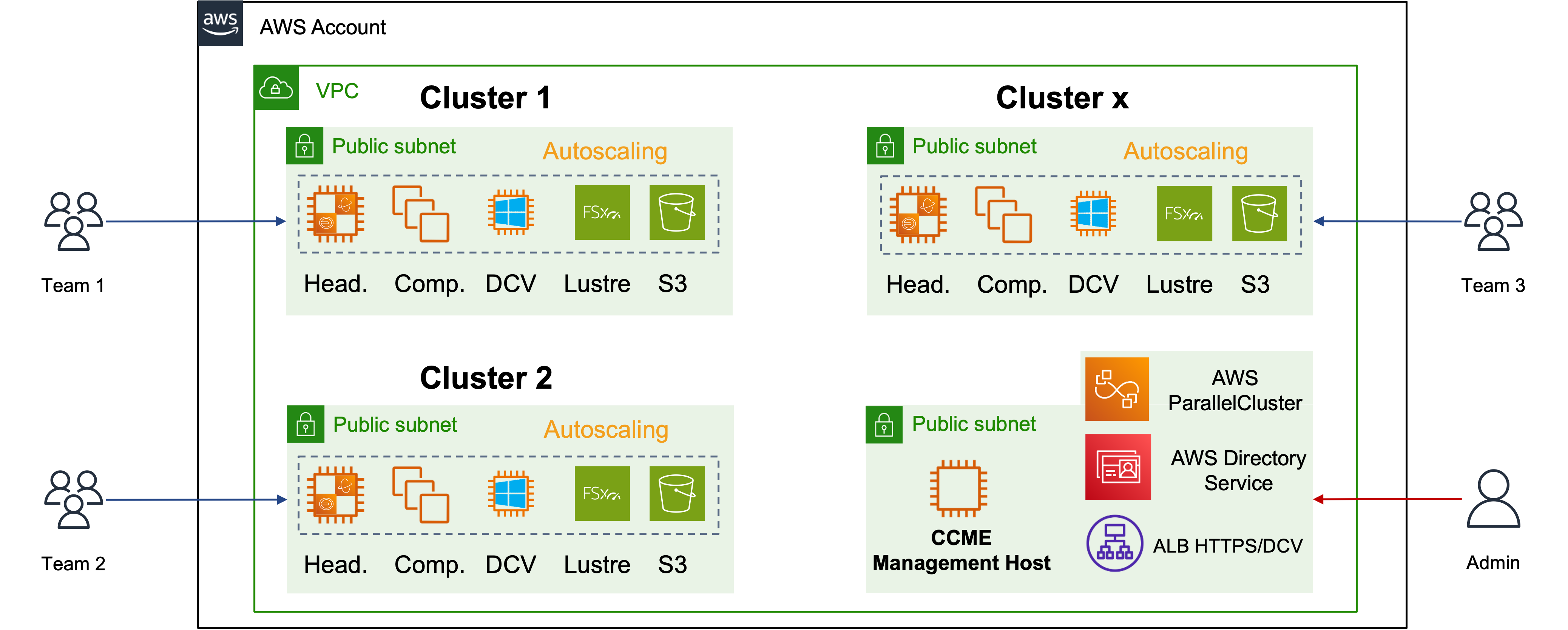

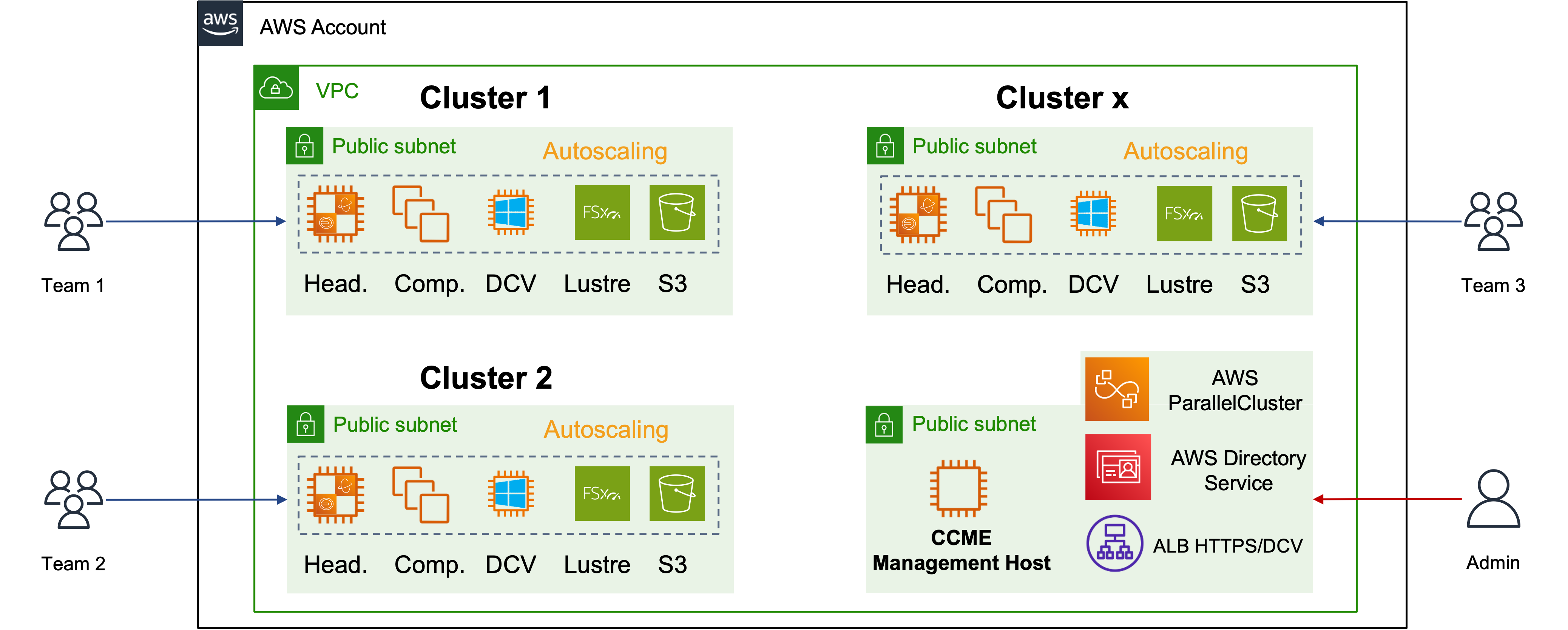

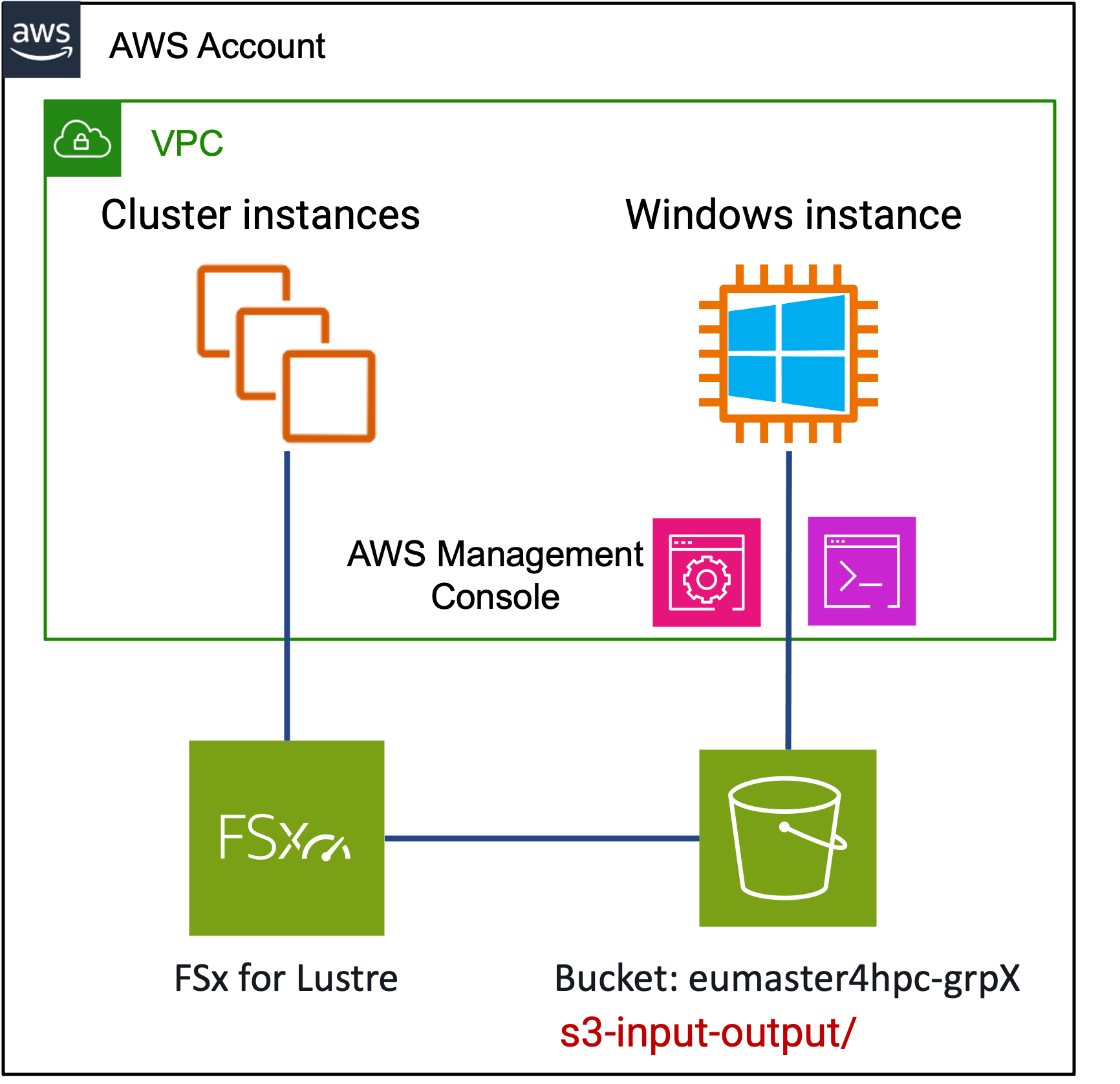

High-level architecture of the Hackathon HPC platform deployed in CIAB.

The entire HPC platform was deployed in the AWS North Virginia region (us-east-1) in order to have sufficient hpc7g capacity (we had created a capacity reservation before the Hackathon to guarantee instance availability during the week).

Each cluster was fully isolated from the others (network, accounts…), so even though it was not a competition, students could not access another team's cluster and interfere with it. Each cluster had the following configuration:

- Scheduler: SLURM

- Head node: running on a c7g.4xlarge instance (16 vCPU – AWS Graviton 3, 32 GiB)

- Compute nodes: 10 hpc7g.16xlarge instances (64 vCPU – AWS Graviton 3, 128 GiB), launched dynamically when needed, were available on each cluster, providing up to 640 vCPU to the team.

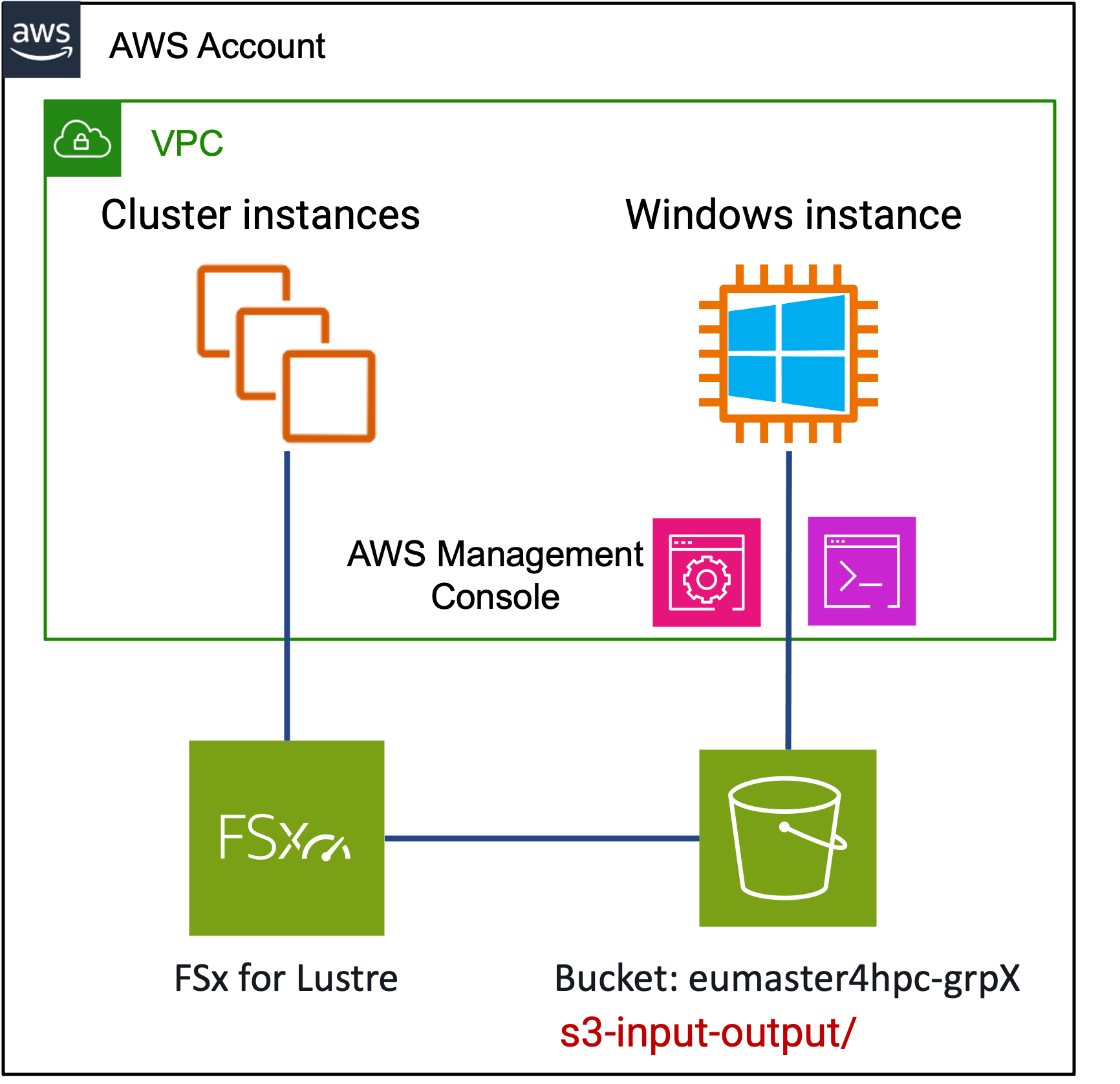

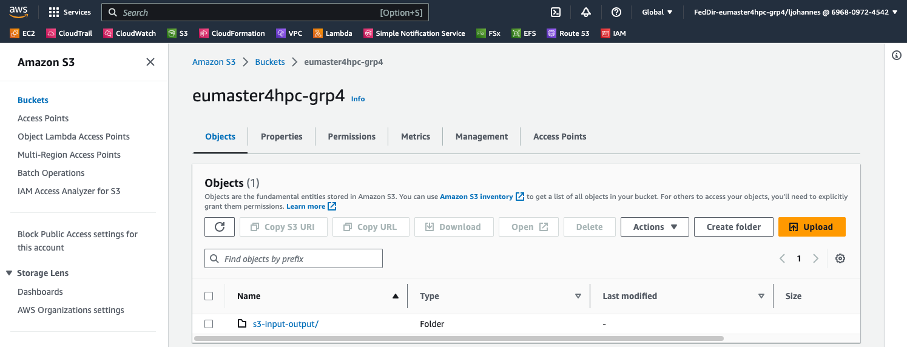

- Storage: a shared NFS hosted the home directories with only 500 GiB, complemented by a parallel file system: a 1.2 TiB FSx for Lustre used as scratch storage. The FSx for Lustre file system was also synchronized with an S3 bucket to share files between the HPC cluster and the Windows workstations for post-processing results.

Windows workstation for post-processing GEOSX results.

- Windows workstation: in addition to each cluster, a Windows workstation running on a g4dn.xlarge instance (4 vCPUs, 16 GB of RAM, and an NVIDIA T4 GPU) was available and accessible through DCV sessions.
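As a quick sanity check on the figures in the configuration list above, the following Python sketch recomputes the compute capacity available per cluster and across the five teams. The instance sizes and counts are those quoted in this post; the class and function names are ours, chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int


# Figures quoted in the configuration list above.
HEAD_NODE = InstanceType("c7g.4xlarge", vcpus=16, memory_gib=32)
COMPUTE_NODE = InstanceType("hpc7g.16xlarge", vcpus=64, memory_gib=128)
MAX_COMPUTE_NODES = 10  # per cluster, launched on demand
TEAMS = 5               # one cluster per team


def compute_capacity(node: InstanceType, count: int) -> dict:
    """Aggregate vCPUs and memory for `count` identical nodes."""
    return {"vcpus": node.vcpus * count, "memory_gib": node.memory_gib * count}


per_cluster = compute_capacity(COMPUTE_NODE, MAX_COMPUTE_NODES)
all_clusters = compute_capacity(COMPUTE_NODE, MAX_COMPUTE_NODES * TEAMS)

print("Per cluster: ", per_cluster)
print("All clusters:", all_clusters)
```

These are upper bounds: because compute nodes are started only when jobs need them, the capacity actually running at any moment is usually lower.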

Students accessed the cluster through the usual means: an SSH connection to the head node, a web portal (EnginFrame) to launch DCV sessions on the Windows instance, and the AWS console to interact with the S3 bucket dedicated to their team (for security reasons, they had access only to their team's bucket and to no other service).

Example of post-processing of a GEOSX simulation on the isoThermalLeakyWell test case, obtained by one of the teams.

One dedicated S3 bucket per team, synchronized with FSx for Lustre.
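To give an idea of what "access only to their team's bucket" can look like, here is a minimal sketch that builds an IAM-style policy document scoped to a single bucket. It is not the policy used during the Hackathon: the bucket name `hackathon-team-1`, the statement identifiers, and the choice of allowed actions are assumptions made for illustration, and a real setup would likely need additional permissions (for example to browse buckets from the console).

```python
import json


def team_bucket_policy(bucket_name: str) -> dict:
    """Build a policy document that only allows access to one S3 bucket.

    Hypothetical helper: the set of allowed actions is an assumption.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Sid": "ListTeamBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reading and writing apply to the objects inside the bucket.
                "Sid": "ReadWriteTeamObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


# "hackathon-team-1" is a made-up bucket name.
policy = team_bucket_policy("hackathon-team-1")
print(json.dumps(policy, indent=2))
```

Since nothing else is allowed, every other bucket and service stays out of reach for an identity that carries only this policy.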

Administrators and teachers had access to all the clusters to help students when needed.

The entire platform was managed centrally by UCit through Cluster-in-a-Box (CIAB): from the initial deployment just before the hackathon began, through the management of teams in a centralized Active Directory, to the removal of all services and the cleanup of the platform. All of this was fully transparent to both students and teachers, and was done very quickly and easily on UCit's side with a set of configuration files and CIAB's automation.

How were the clusters used?

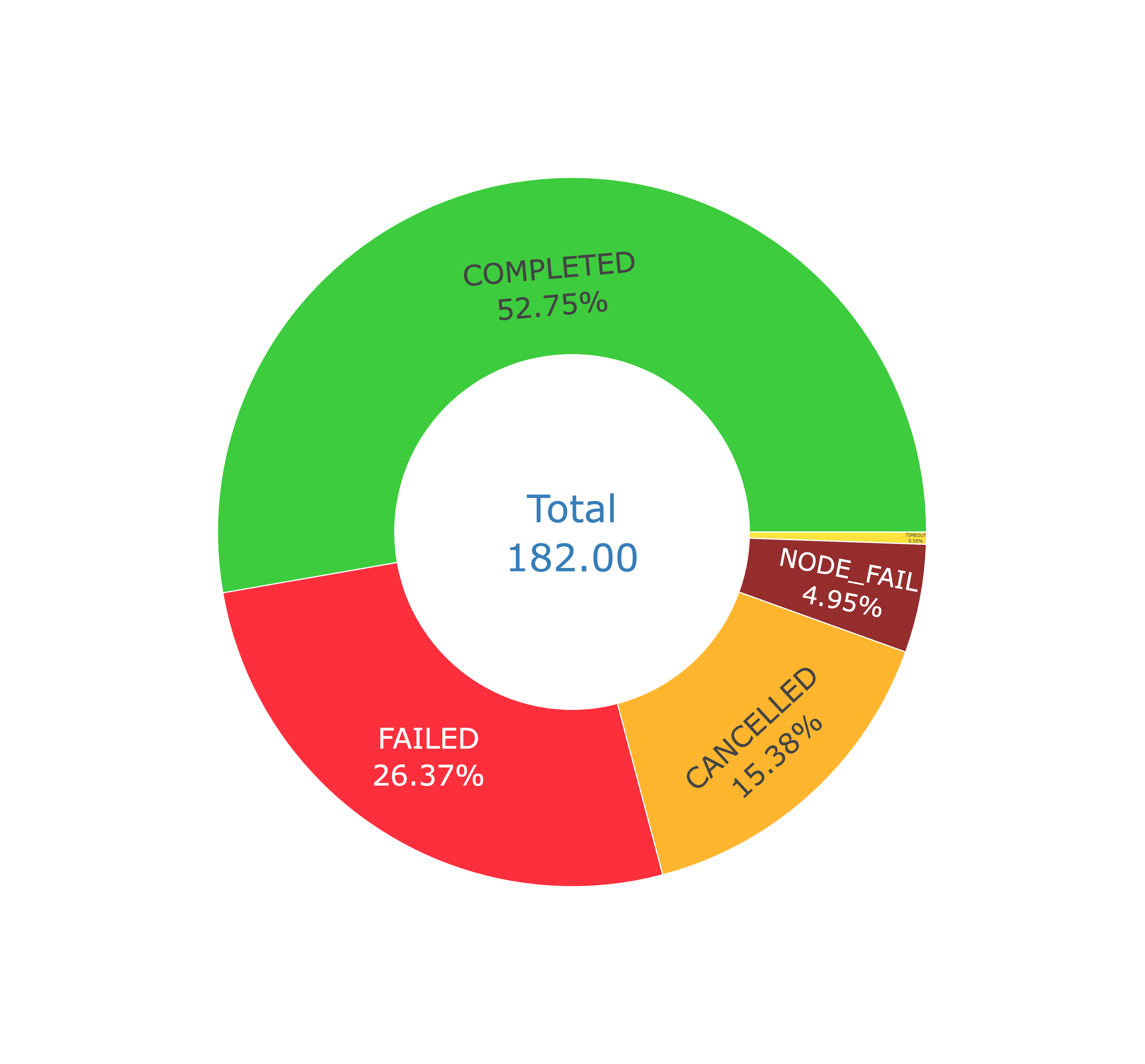

Since the clusters were deployed with CCME, we automatically have access to the accounting information for every job the students submitted. Although the goal of the Hackathon was not limited to running simulations (most of the work went into benchmarking the nodes and compiling GEOSX), it is still interesting to understand how the provided resources were used.

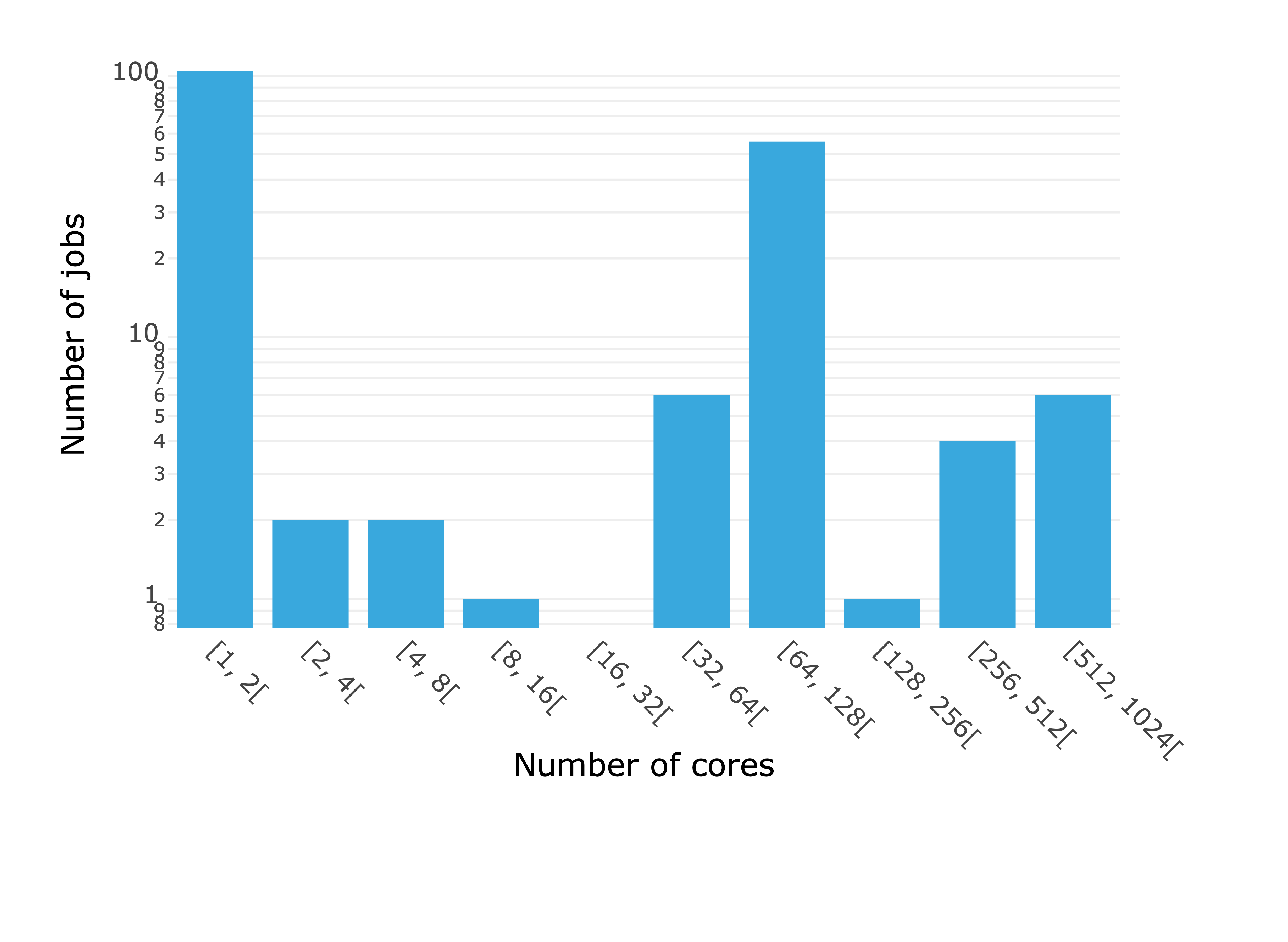

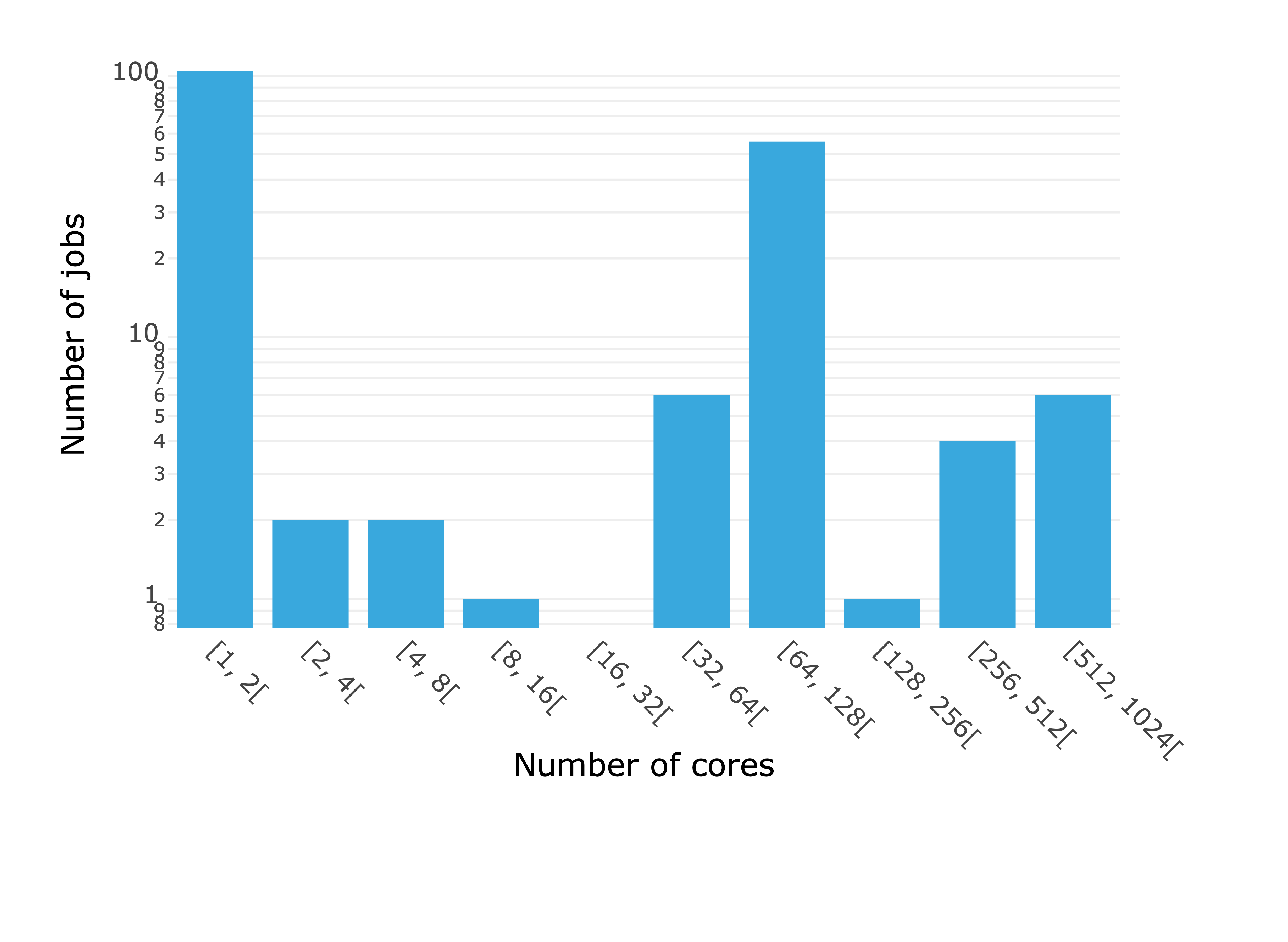

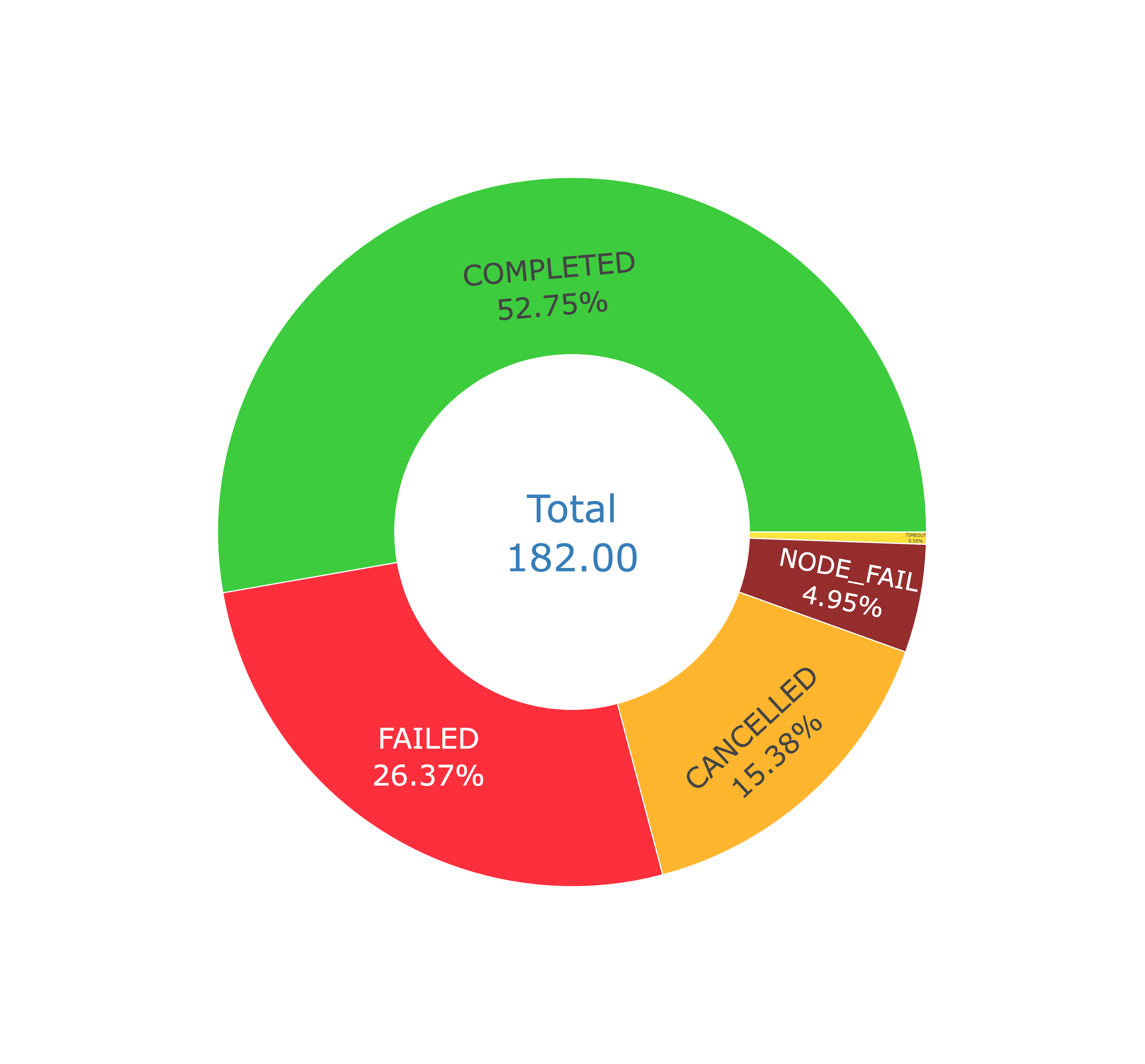

CCME automatically collects the Slurm accounting logs, which we can then load into OKA (see our other post on the subject for more details), our data science platform for HPC clusters. All the teams managed to run GEOSX simulations on multiple nodes, trying to scale them from 2 to 10 nodes. Most jobs were submitted on the last day of the hands-on session, because most of the initial work consisted of benchmarking an hpc7g instance (using HPL, Stream, and HPCG) and then compiling GEOSX.

Example for one of the teams: core allocation and number of jobs by job status, visualized in the OKA suite.
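For readers who want to try a similar summary without OKA, here is a minimal sketch that counts jobs by state and totals CPU-hours from Slurm accounting output. It does not reflect how CCME or OKA process the logs. It assumes pipe-separated lines such as those produced by `sacct -X -n -P -o JobID,State,AllocCPUS,ElapsedRaw`, and the sample records are invented for illustration.

```python
from collections import Counter

# Invented sample in the format JobID|State|AllocCPUS|ElapsedRaw (seconds),
# as produced by: sacct -X -n -P -o JobID,State,AllocCPUS,ElapsedRaw
SAMPLE = """\
101|COMPLETED|128|3600
102|FAILED|64|120
103|COMPLETED|640|1800
104|CANCELLED by 1001|256|60
"""


def summarize(text: str) -> tuple[dict, float]:
    """Return (number of jobs per state, total CPU-hours)."""
    jobs_by_state = Counter()
    cpu_seconds = 0
    for line in text.splitlines():
        if not line.strip():
            continue
        _job_id, state, alloc_cpus, elapsed_s = line.split("|")
        # "CANCELLED by <uid>" is folded into plain "CANCELLED".
        jobs_by_state[state.split()[0]] += 1
        cpu_seconds += int(alloc_cpus) * int(elapsed_s)
    return dict(jobs_by_state), cpu_seconds / 3600


jobs_by_state, cpu_hours = summarize(SAMPLE)
print(jobs_by_state)
print(f"{cpu_hours:.1f} CPU-hours")
```

Grouping the same records by submission day would show the pattern described above, with most jobs landing on the last hands-on day.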

Overall, not all of the available resources were used. This is expected in a non-production environment and fairly common in a training setting. This is where autoscaling of compute resources matters: instances are launched only when needed and stopped after a short idle period (when no job is running on them). In the end, thanks to CCME and CIAB, you pay only for what you consume.
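The effect of this policy can be illustrated with a small model. The sketch below estimates how long a single compute node stays up (and is therefore billed) when it starts with the first job and stops once it has been idle longer than a timeout. It is a simplified illustration, not CCME's actual scaling logic: the function name, the 10-minute timeout, and the job intervals are assumptions, and boot time is ignored.

```python
def billed_minutes(jobs: list[tuple[int, int]], idle_timeout: int) -> int:
    """Minutes a node is up, given (start, end) job intervals in minutes.

    The node starts with a job, stays up through idle gaps no longer than
    `idle_timeout`, and stops `idle_timeout` minutes after its last job.
    """
    total = 0
    up_since = None
    last_end = None
    for start, end in sorted(jobs):
        if up_since is None:
            up_since, last_end = start, end
        elif start - last_end > idle_timeout:
            # The node was stopped during the gap and is relaunched now.
            total += (last_end + idle_timeout) - up_since
            up_since, last_end = start, end
        else:
            last_end = max(last_end, end)
    if up_since is not None:
        total += (last_end + idle_timeout) - up_since
    return total


# Invented workload: two jobs close together, then one much later.
jobs = [(0, 30), (35, 60), (200, 230)]
autoscaled = billed_minutes(jobs, idle_timeout=10)
always_on = 240  # node kept running for the same 4-hour window

print(f"autoscaled: {autoscaled} min, always-on: {always_on} min")
```

In this made-up workload the node is up for 110 minutes instead of 240, which is the kind of saving a training environment with bursty usage can expect.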

The success factors of this Hackathon

The success of the 2023 edition can be attributed to several key factors.

- The students' enthusiastic and positive engagement played an essential role. Their passion for HPC and their willingness to collaborate and challenge ideas created a dynamic and productive atmosphere.

- The active involvement of AWS and its partners also contributed significantly to the event's achievements. AWS provided essential HPC resources, while ARM offered valuable guidance on the porting process, bringing in tools such as ACFL (ARM Compiler for Linux) and ARMPL (ARM Performance Libraries). NVIDIA and SiPearl shared their invaluable hardware expertise, and TotalEnergies contributed its deep knowledge of GEOSX.

- Another critical success factor was the use of efficient HPC provisioning and administration solutions, which gave students seamless access to HPC resources and made monitoring easy for the administrative teams throughout the event:

  - Efficient cluster provisioning with Cluster-in-a-Box (CIAB) and Cloud Cluster Made Easy (CCME)

  - Seamless remote access to machines with NICE's EnginFrame and DCV solutions

  - Detailed analysis of resource usage with the OKA Suite

These solutions, administered by UCit, played an essential role not only in streamlining resource allocation and monitoring, but also in optimizing resource usage, ensuring that students could get the most out of their HPC experience. Together, these factors created an environment of collaboration, learning, and innovation that defined the success of the 2023 Hackathon.

Acknowledgements

Sincere thanks go to Kevin Tuil (AWS), Conrad Hillairet (ARM), Benjamin Depardon and Jorik Remy (UCit), and François Hamon (TotalEnergies) for their exceptional efforts in orchestrating the infrastructure and securing the portability of the GEOS(X) code to AWS EC2 Hpc7g instances, as well as for their invaluable support to the participating student teams. This summer school event, led by Ronan Guivarch (professor at Toulouse INP), Arnaud Renard (director of the ROMEO computing center), Christophe Picard (professor at Grenoble INP), and Stéphane Labbé (director of SUMMIT at Sorbonne Universités), received substantial support from a diverse ecosystem. Our gratitude extends to AWS and to its HPC partner networks, including NVIDIA (represented by Gunter Roeth), UCit, Arm, and SiPearl, for their enthusiastic support. The active involvement of François Hamon of TotalEnergies added immense value to this endeavor.